Model Cards provide a framework for transparent, responsible reporting. Use the vetiver `.qmd` Quarto template as a place to start, with vetiver.model_card()

Writing pin:

Name: 'wd-gb'

Version: 20251124T032542Z-343ba

Gradient Boost

Before moving forward with the to-do list, let’s throw a Random Forest at it.

Gradient boost

For many reasons, Random Forest is usually a very good baseline model. In this particular case I started with a polynomial OLS as the baseline, simply because the correlations made it evident that the relationship between temperature and consumption follows a polynomial shape. But let’s go back to a beloved RF.

⏩ stepit 'gb_raw': Starting execution of `strom.modelling.assess_model()` 2025-11-24 03:25:42

⏩ stepit 'get_single_split_metrics': Starting execution of `strom.modelling.get_single_split_metrics()` 2025-11-24 03:25:42

✅ stepit 'get_single_split_metrics': Successfully completed and cached [exec time 0.0 seconds, cache time 0.0 seconds, size 1.0 KB] `strom.modelling.get_single_split_metrics()` 2025-11-24 03:25:42

♻️ stepit 'cross_validate_pipe': is up-to-date. Using cached result for `strom.modelling.cross_validate_pipe()` 2025-11-24 03:25:42

✅ stepit 'gb_raw': Successfully completed and cached [exec time 0.2 seconds, cache time 0.0 seconds, size 142.6 KB] `strom.modelling.assess_model()` 2025-11-24 03:25:42

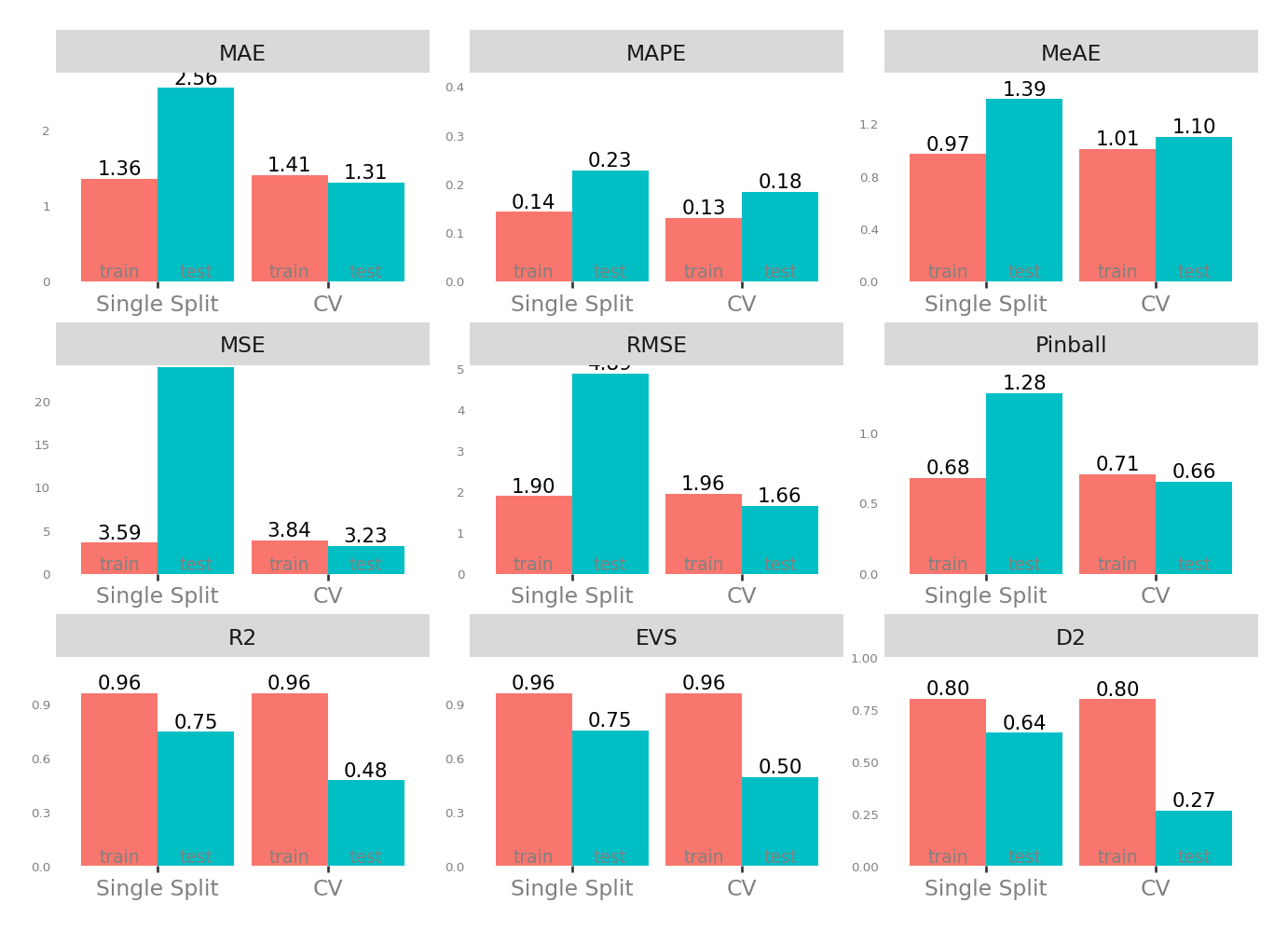

Metrics

| Metric | Single Split (train) | Single Split (test) | CV (test) | CV (train) |
|---|---|---|---|---|
| MAE - Mean Absolute Error | 1.364678 | 2.563777 | 1.310741 | 1.412537 |
| MSE - Mean Squared Error | 3.592260 | 23.954699 | 3.232546 | 3.839411 |
| RMSE - Root Mean Squared Error | 1.895326 | 4.894354 | 1.660649 | 1.959309 |
| R2 - Coefficient of Determination | 0.961459 | 0.746361 | 0.477251 | 0.961105 |
| MAPE - Mean Absolute Percentage Error | 0.143197 | 0.228165 | 0.184038 | 0.130849 |
| EVS - Explained Variance Score | 0.961459 | 0.754822 | 0.495584 | 0.961105 |
| MeAE - Median Absolute Error | 0.968555 | 1.386748 | 1.100589 | 1.007152 |
| D2 - D2 Absolute Error Score | 0.802989 | 0.639577 | 0.267363 | 0.800863 |
| Pinball - Mean Pinball Loss | 0.682339 | 1.281889 | 0.655371 | 0.706268 |
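To make the metric names in the table concrete, here is a minimal sketch of their textbook definitions in plain Python. It is not the code behind `strom.modelling.get_single_split_metrics()`, whose implementation is not shown here; the function name `regression_metrics` and the toy values are made up for illustration. The pinball loss uses `alpha=0.5`, which is an assumption, although it is consistent with the table, where Pinball is exactly half of MAE in every column.

```python
import math
import statistics


def regression_metrics(y_true, y_pred, alpha=0.5):
    """Compute the table's metrics from their textbook definitions."""
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    abs_res = [abs(r) for r in residuals]

    mae = sum(abs_res) / n
    mse = sum(r * r for r in residuals) / n

    mean_y = sum(y_true) / n
    median_y = statistics.median(y_true)
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)

    # Pinball loss weights under-prediction by alpha and over-prediction by 1 - alpha.
    pinball = sum(alpha * r if r >= 0 else (alpha - 1) * r for r in residuals) / n

    return {
        "MAE": mae,
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "R2": 1 - (mse * n) / ss_tot,
        # Assumes no zero targets; libraries usually guard the denominator.
        "MAPE": sum(a / abs(t) for a, t in zip(abs_res, y_true)) / n,
        "EVS": 1 - statistics.pvariance(residuals) / statistics.pvariance(y_true),
        "MeAE": statistics.median(abs_res),
        "D2": 1 - sum(abs_res) / sum(abs(t - median_y) for t in y_true),
        "Pinball": pinball,
    }


# Toy values, not the consumption data from this post.
y_true = [10.0, 12.0, 15.0, 20.0, 30.0]
y_pred = [11.0, 11.0, 16.0, 18.0, 33.0]
metrics = regression_metrics(y_true, y_pred)

for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

R2 and EVS differ only by the squared mean of the residuals, so they coincide when the residuals average to zero. That matches the train columns of the table, where both scores are identical.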

Scatter plot matrix

Observed vs. Predicted and Residuals vs. Predicted

Check for …

Check the residuals to assess the goodness of fit:

- Are they white noise, or is there a pattern?

- Is there heteroscedasticity?

- Is there non-linearity?
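The three questions above are answered visually in the plots, but they can also be approximated with a few numbers. The sketch below is one possible set of quick checks, not the diagnostics used in this post: the helper names `pearson` and `residual_checks` are hypothetical, the lag-1 autocorrelation is a simple shifted-series correlation rather than a full ACF, and the toy data is constructed so that the residual spread grows with the prediction.

```python
import math


def pearson(x, y):
    """Plain Pearson correlation between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def residual_checks(y_true, y_pred):
    """Rough numeric companions to the residual plots (observations in time order)."""
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    squared_steps = sum((b - a) ** 2 for a, b in zip(residuals, residuals[1:]))
    return {
        # Pattern over time: a value near 0 is what white noise would give.
        "lag1_autocorr": pearson(residuals[:-1], residuals[1:]),
        # Durbin-Watson statistic: about 2 means no first-order autocorrelation.
        "durbin_watson": squared_steps / sum(r * r for r in residuals),
        # Heteroscedasticity: does the residual spread grow with the prediction?
        "abs_resid_vs_pred": pearson([abs(r) for r in residuals], y_pred),
        # Linear trend left in the residuals; curved patterns still need the plot.
        "resid_vs_pred": pearson(residuals, y_pred),
    }


# Toy data: residuals alternate in sign and grow with the prediction.
y_pred = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
noise = [0.1, -0.2, 0.3, -0.4, 0.5, -0.6, 0.7, -0.8]
y_true = [p + e for p, e in zip(y_pred, noise)]

checks = residual_checks(y_true, y_pred)
for name, value in checks.items():
    print(f"{name}: {value:.3f}")
```

On this toy data the absolute residuals correlate almost perfectly with the predictions, which is the numeric signature of the funnel shape one would look for in the Residuals vs. Predicted plot.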

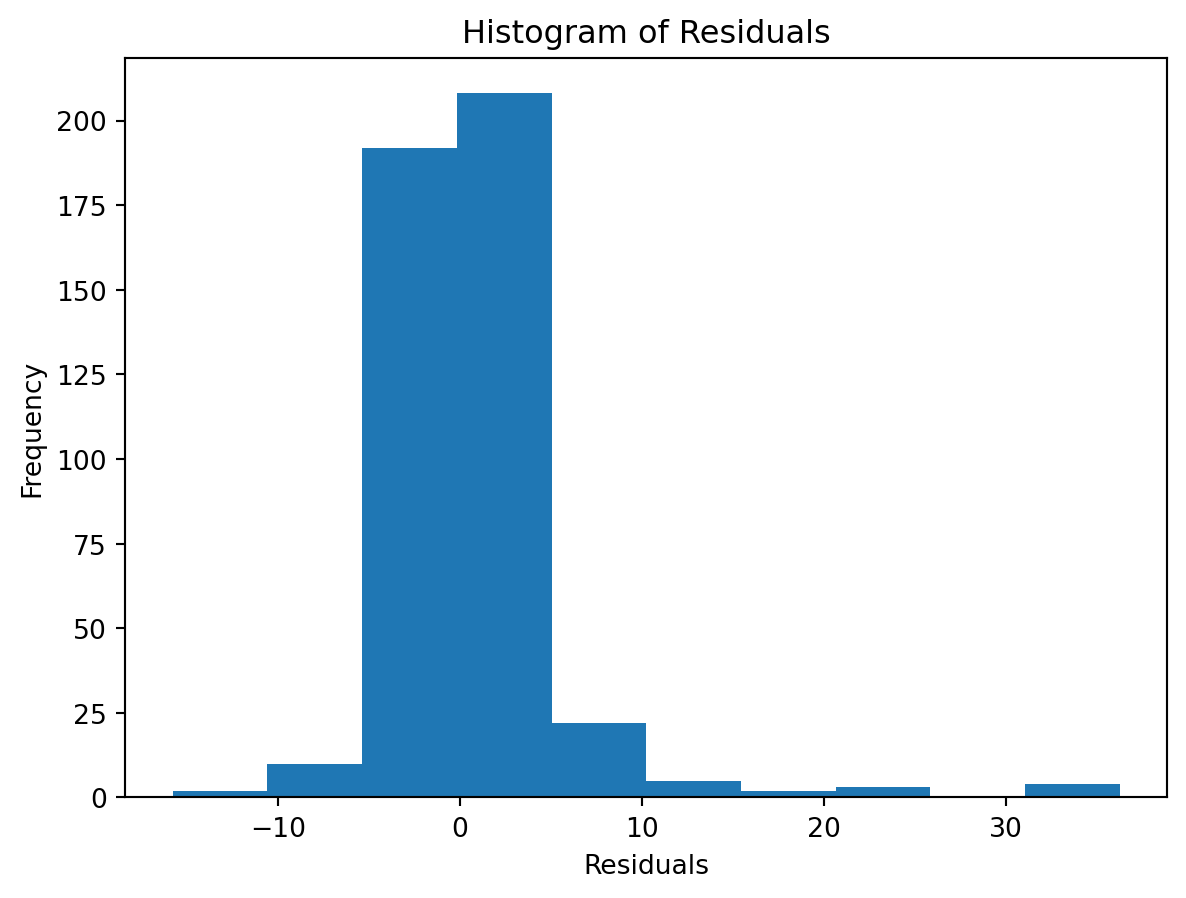

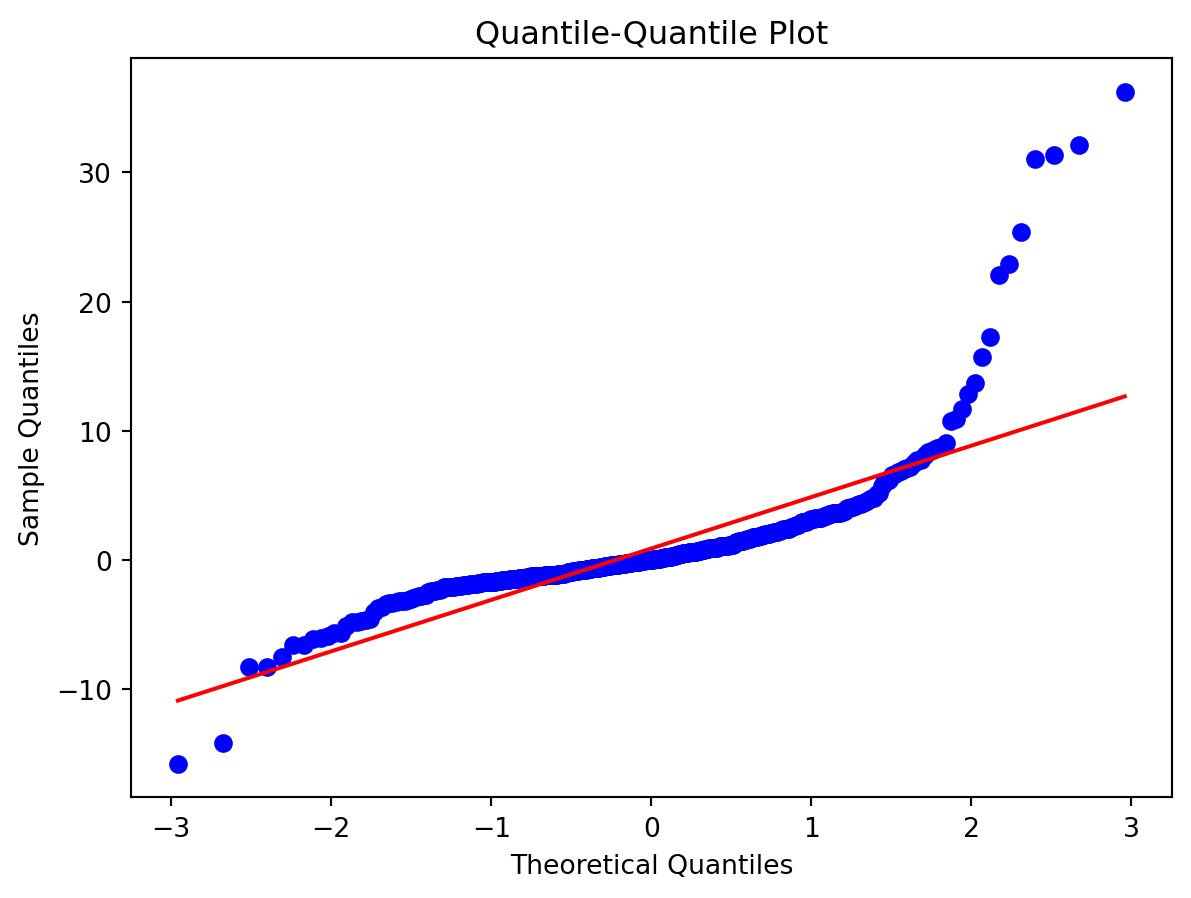

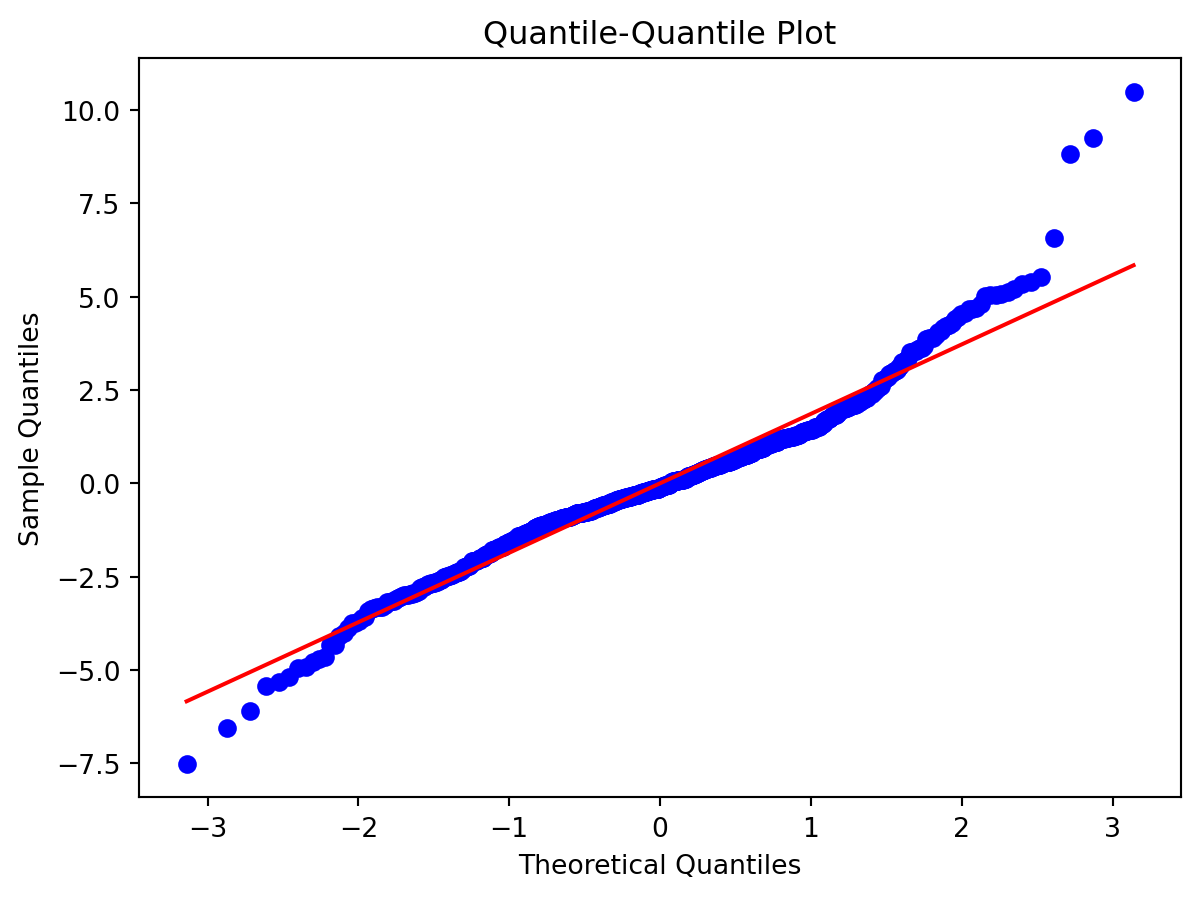

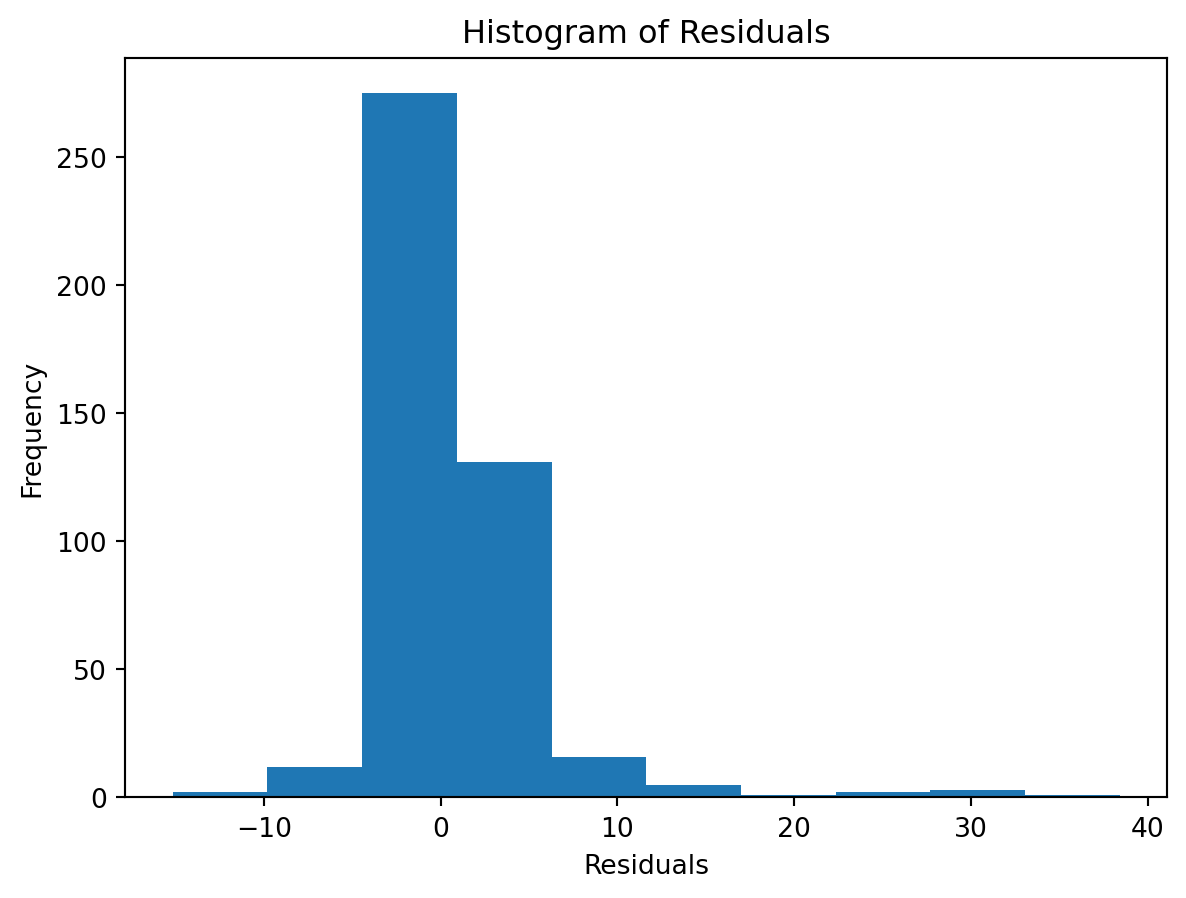

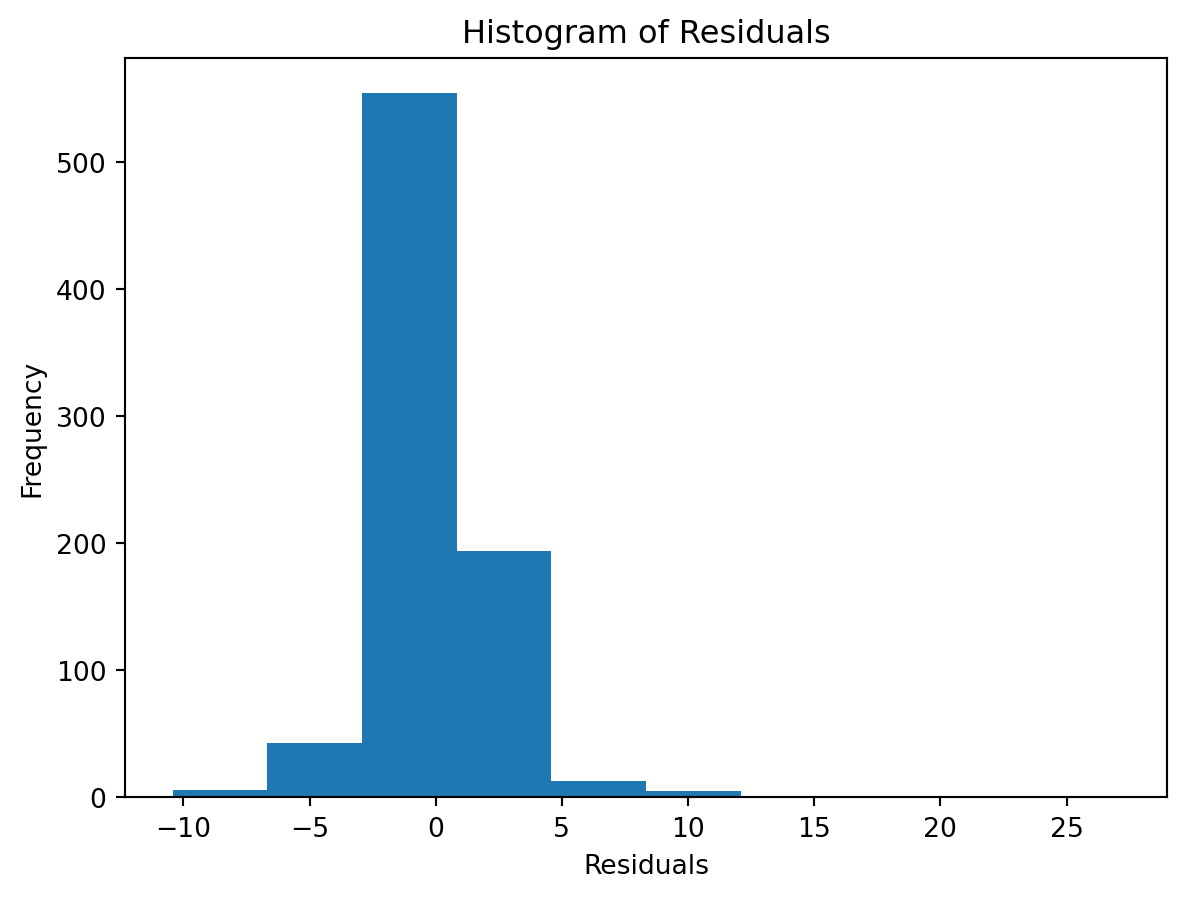

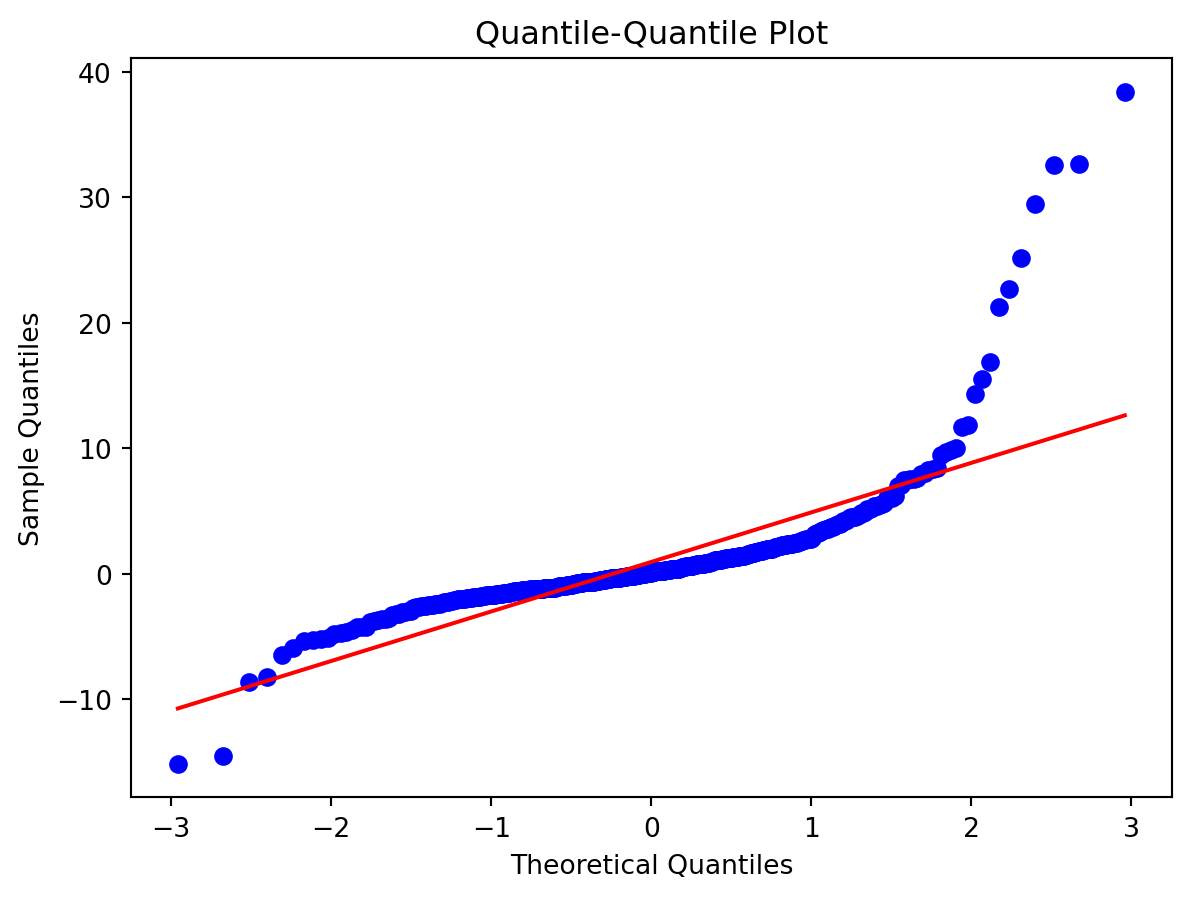

Normality of Residuals:

Check for …

- Are residuals normally distributed?

Leverage

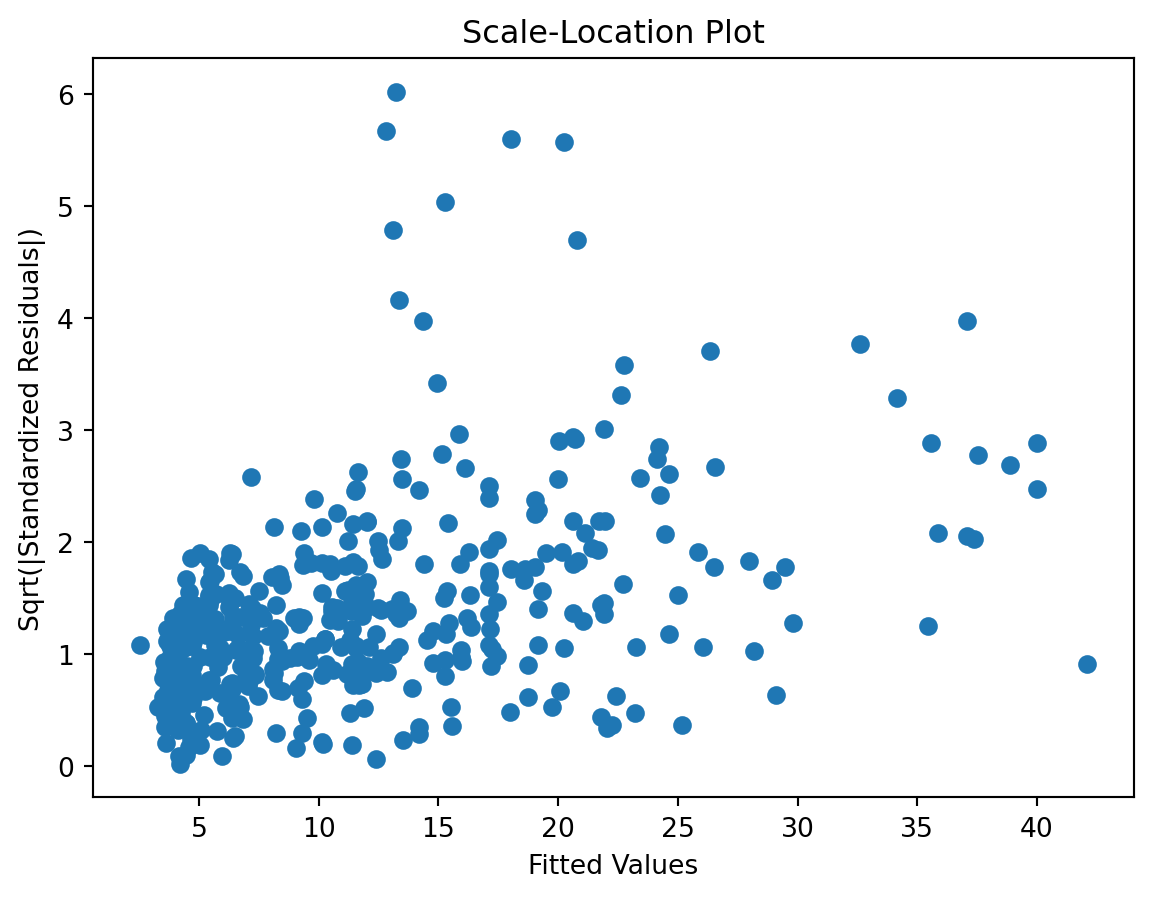

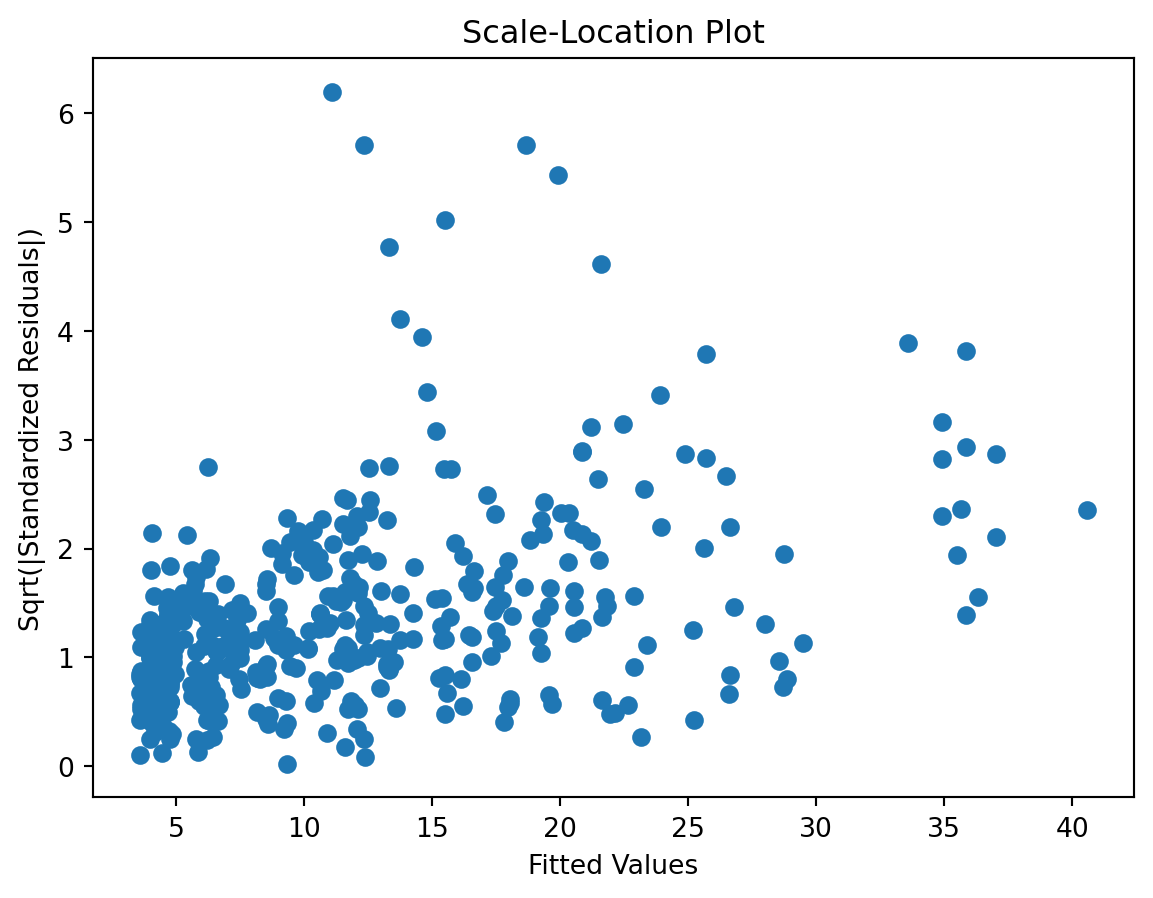

Scale-Location plot

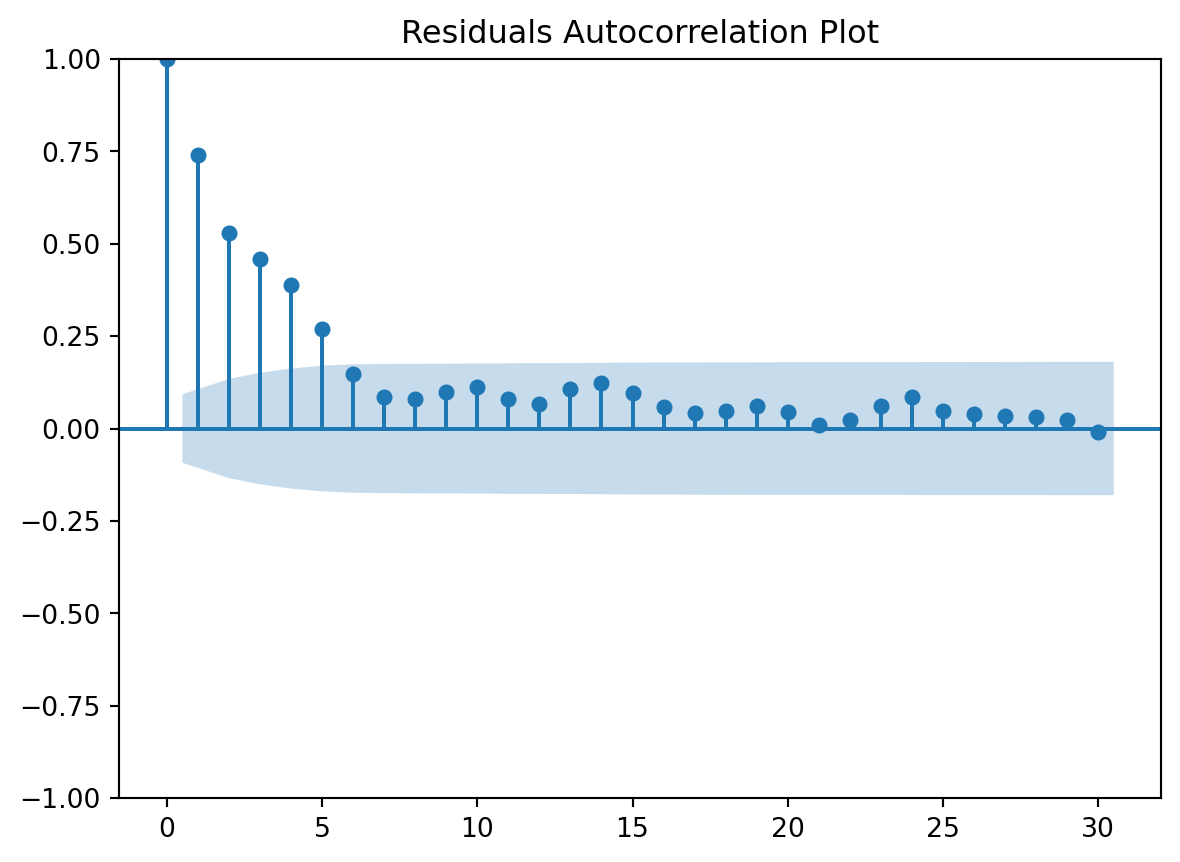

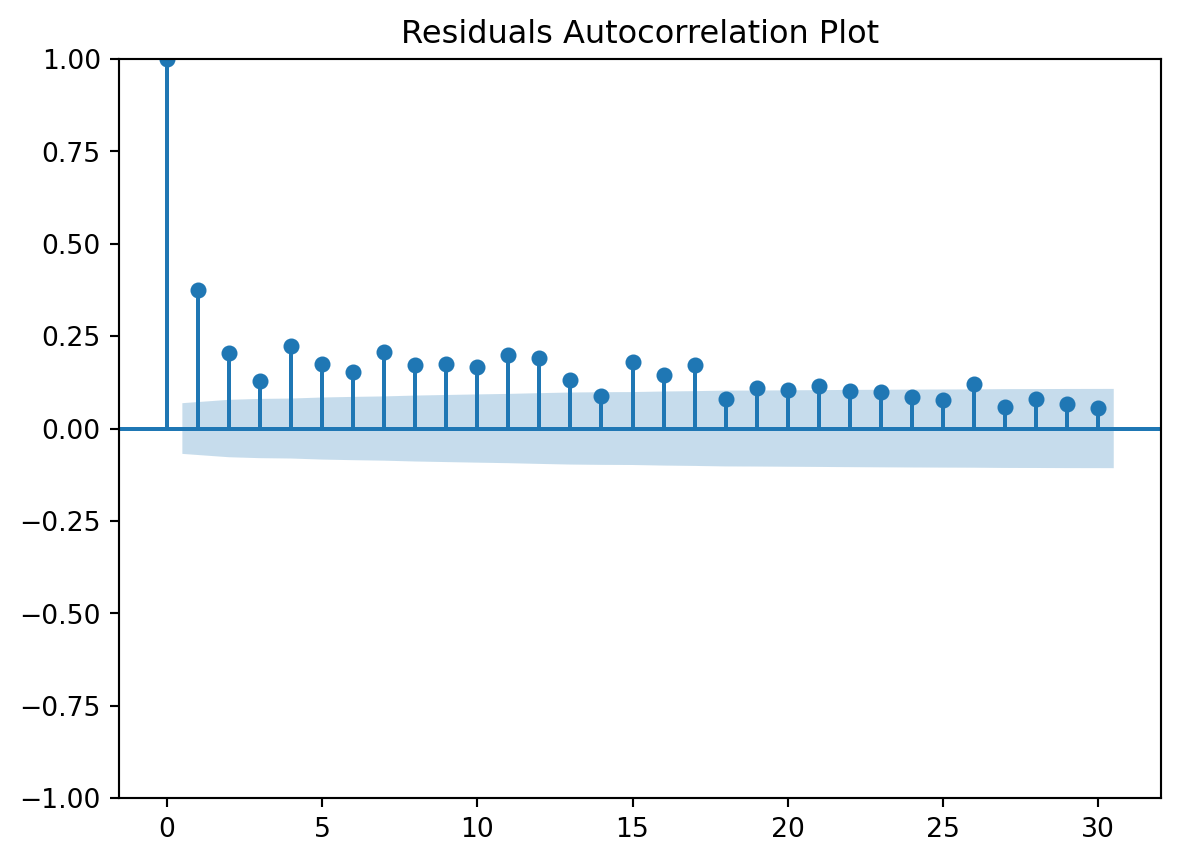

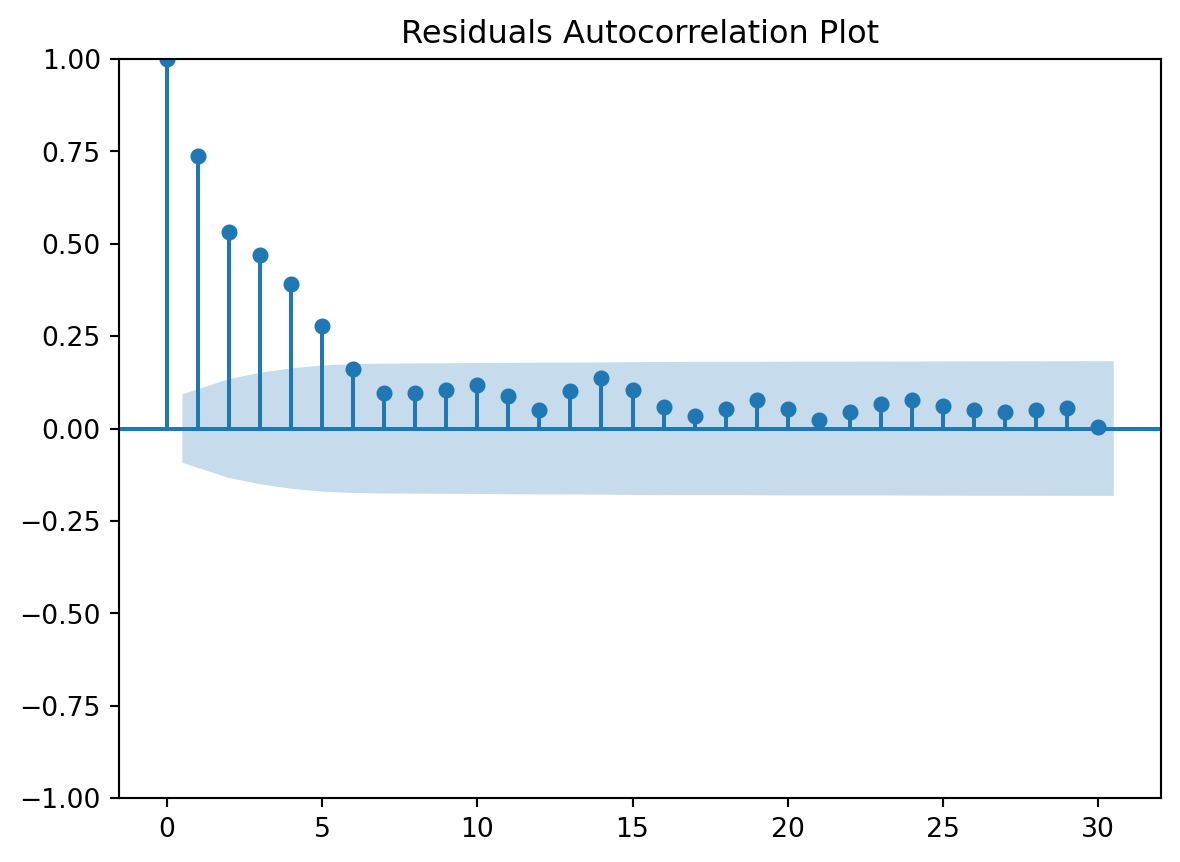

Residuals Autocorrelation Plot

Residuals vs Time

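Again, the model overfits a lot: on the single split, R2 falls from 0.96 on the training set to 0.75 on the test set, and RMSE rises from 1.90 to 4.89.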

Parameter: param_model__learning_rate

Parameter: param_model__max_depth

Parameter: param_model__min_samples_leaf

Parameter: param_model__min_samples_split

Parameter: param_model__n_estimators

Parameter: param_model__subsample

Parameter: param_vars__columns

Best model

{'model__learning_rate': 0.1,

'model__max_depth': 5,

'model__min_samples_leaf': 5,

'model__min_samples_split': 48,

'model__n_estimators': 60,

'model__subsample': 1,

'vars__columns': ['tt_tu_mean', 'td_mean']}

⏩ stepit 'gb_tuned': Starting execution of `strom.modelling.assess_model()` 2025-11-24 03:25:50

⏩ stepit 'get_single_split_metrics': Starting execution of `strom.modelling.get_single_split_metrics()` 2025-11-24 03:25:50

✅ stepit 'get_single_split_metrics': Successfully completed and cached [exec time 0.0 seconds, cache time 0.0 seconds, size 1.0 KB] `strom.modelling.get_single_split_metrics()` 2025-11-24 03:25:50

♻️ stepit 'cross_validate_pipe': is up-to-date. Using cached result for `strom.modelling.cross_validate_pipe()` 2025-11-24 03:25:50

✅ stepit 'gb_tuned': Successfully completed and cached [exec time 0.1 seconds, cache time 0.0 seconds, size 150.2 KB] `strom.modelling.assess_model()` 2025-11-24 03:25:50
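The `model__…` and `vars__columns` keys in the best-model dictionary follow scikit-learn's `step__parameter` naming for pipelines, so the set of input columns is tuned like any other hyperparameter. The sketch below shows how such a search could look with `GridSearchCV`. It is an assumption that a grid search was used here (a randomized search would produce the same key names), the `ColumnSelector` class is a hypothetical stand-in for the one in the pipeline, and the data, grid values, and scoring choice are invented for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline


class ColumnSelector(BaseEstimator, TransformerMixin):
    """Hypothetical stand-in: keeps only the named DataFrame columns."""

    def __init__(self, columns=None):
        self.columns = columns

    def fit(self, X, y=None):
        return self

    def transform(self, X):
        return X[self.columns]


# Synthetic data for illustration only, not the weather and consumption data.
rng = np.random.default_rng(7)
X = pd.DataFrame({
    "tt_tu_mean": rng.normal(10, 8, 200),
    "td_mean": rng.normal(5, 6, 200),
    "rf_tu_mean": rng.uniform(40, 100, 200),
})
y = 0.05 * (X["tt_tu_mean"] - 15) ** 2 + rng.normal(0, 1, 200)

pipe = Pipeline([
    ("vars", ColumnSelector()),
    ("model", GradientBoostingRegressor(random_state=7)),
])

# Keys are "<step name>__<parameter>"; the column sets are candidates too.
param_grid = {
    "vars__columns": [
        ["tt_tu_mean", "td_mean"],
        ["tt_tu_mean", "td_mean", "rf_tu_mean"],
    ],
    "model__max_depth": [3, 5],
    "model__n_estimators": [60, 100],
}

search = GridSearchCV(
    pipe,
    param_grid,
    cv=3,
    scoring="neg_root_mean_squared_error",
    return_train_score=True,  # keeps train scores so overfitting stays visible
)
search.fit(X, y)
print(search.best_params_)
```

After fitting, `search.cv_results_` contains one `param_…` entry per tuned parameter (for example `param_vars__columns`), which is where names like the `Parameter: param_model__max_depth` headings above come from.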

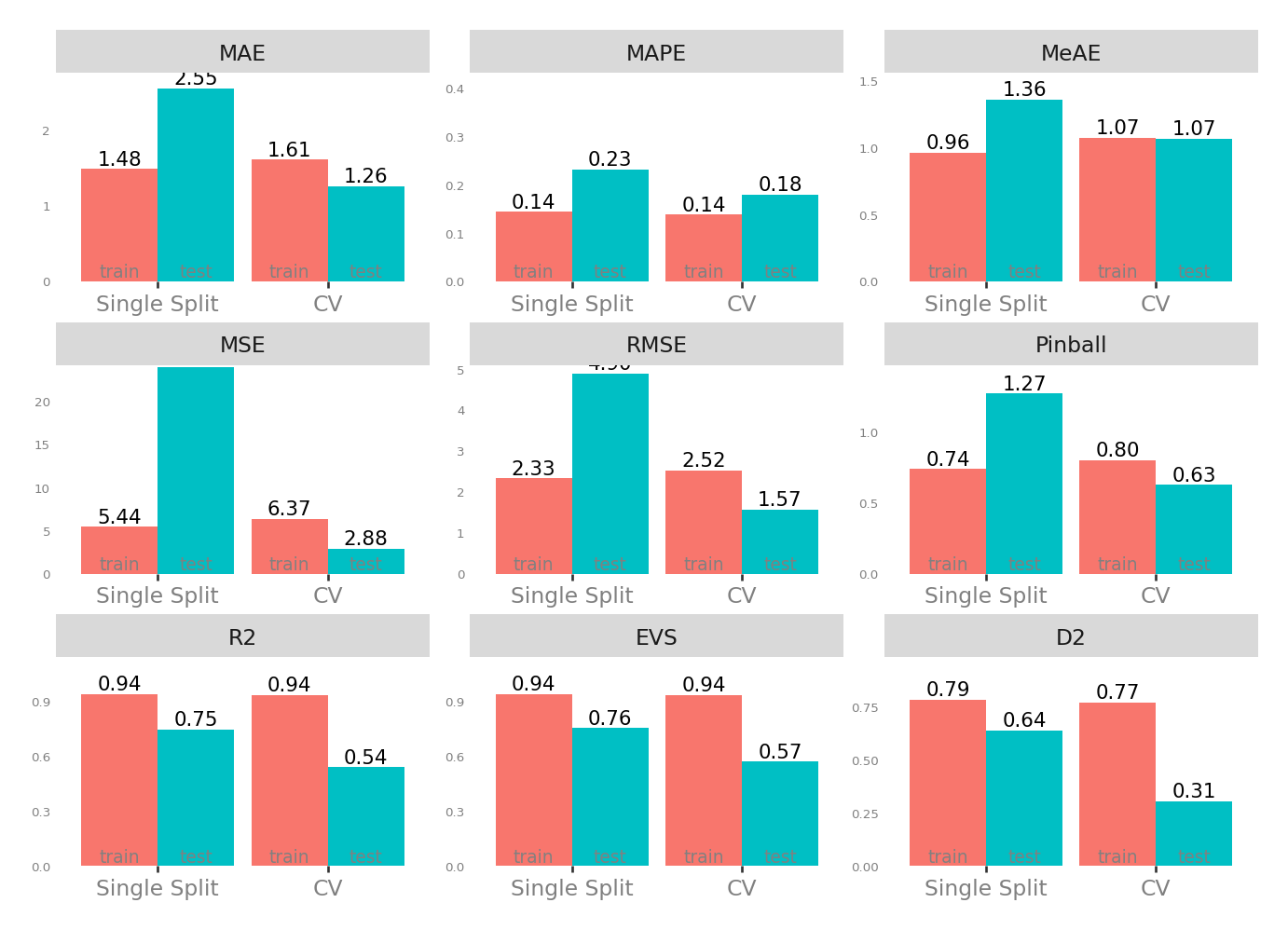

Metrics

| Metric | Single Split (train) | Single Split (test) | CV (test) | CV (train) |
|---|---|---|---|---|
| MAE - Mean Absolute Error | 1.481329 | 2.548565 | 1.255343 | 1.607755 |
| MSE - Mean Squared Error | 5.438492 | 24.000544 | 2.884752 | 6.369723 |
| RMSE - Root Mean Squared Error | 2.332058 | 4.899035 | 1.572622 | 2.522672 |
| R2 - Coefficient of Determination | 0.941651 | 0.745876 | 0.540709 | 0.935623 |
| MAPE - Mean Absolute Percentage Error | 0.144094 | 0.232144 | 0.179743 | 0.138839 |
| EVS - Explained Variance Score | 0.941651 | 0.755293 | 0.570550 | 0.935623 |
| MeAE - Median Absolute Error | 0.962382 | 1.360189 | 1.069666 | 1.074011 |
| D2 - D2 Absolute Error Score | 0.786149 | 0.641716 | 0.306742 | 0.773475 |
| Pinball - Mean Pinball Loss | 0.740665 | 1.274283 | 0.627672 | 0.803877 |

Scatter plot matrix

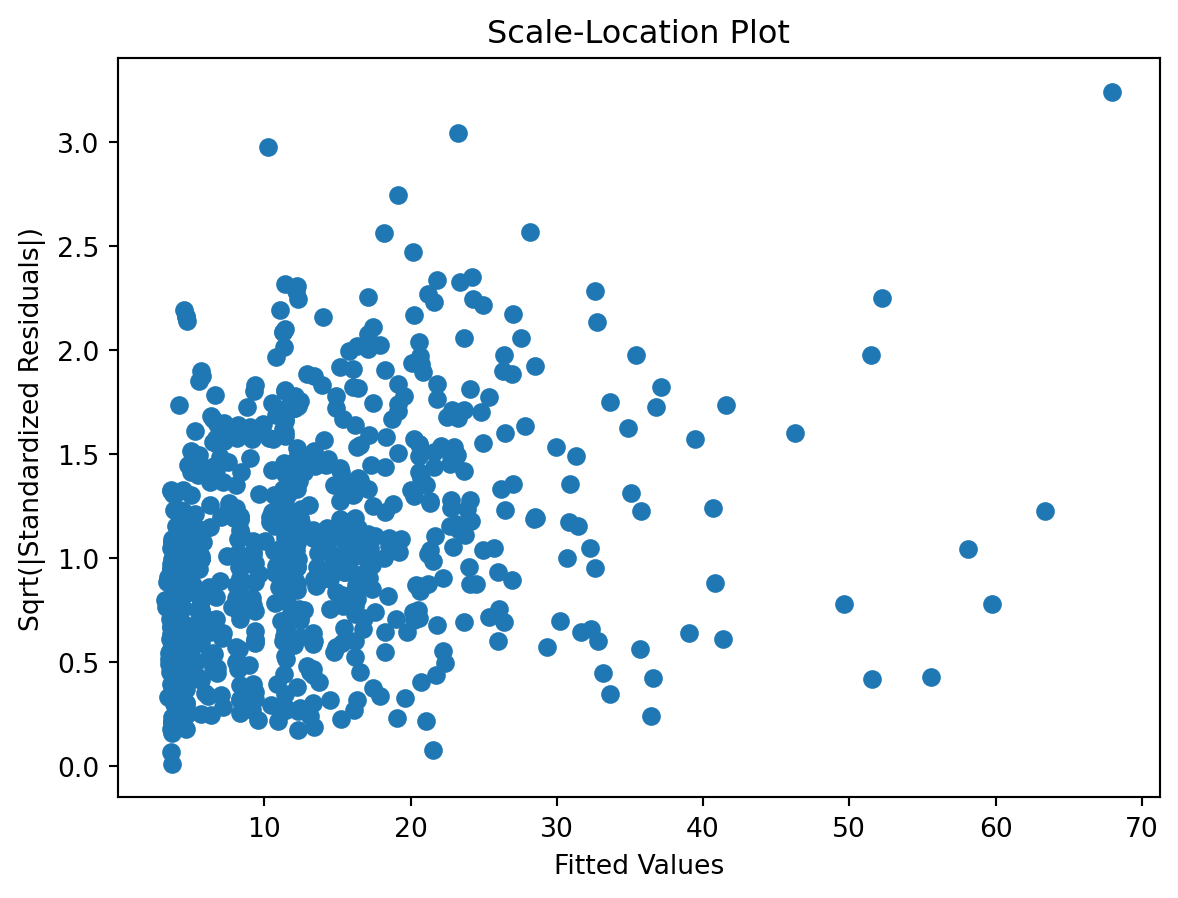

Observed vs. Predicted and Residuals vs. Predicted

Check for …

Check the residuals to assess the goodness of fit:

- Are they white noise, or is there a pattern?

- Is there heteroscedasticity?

- Is there non-linearity?

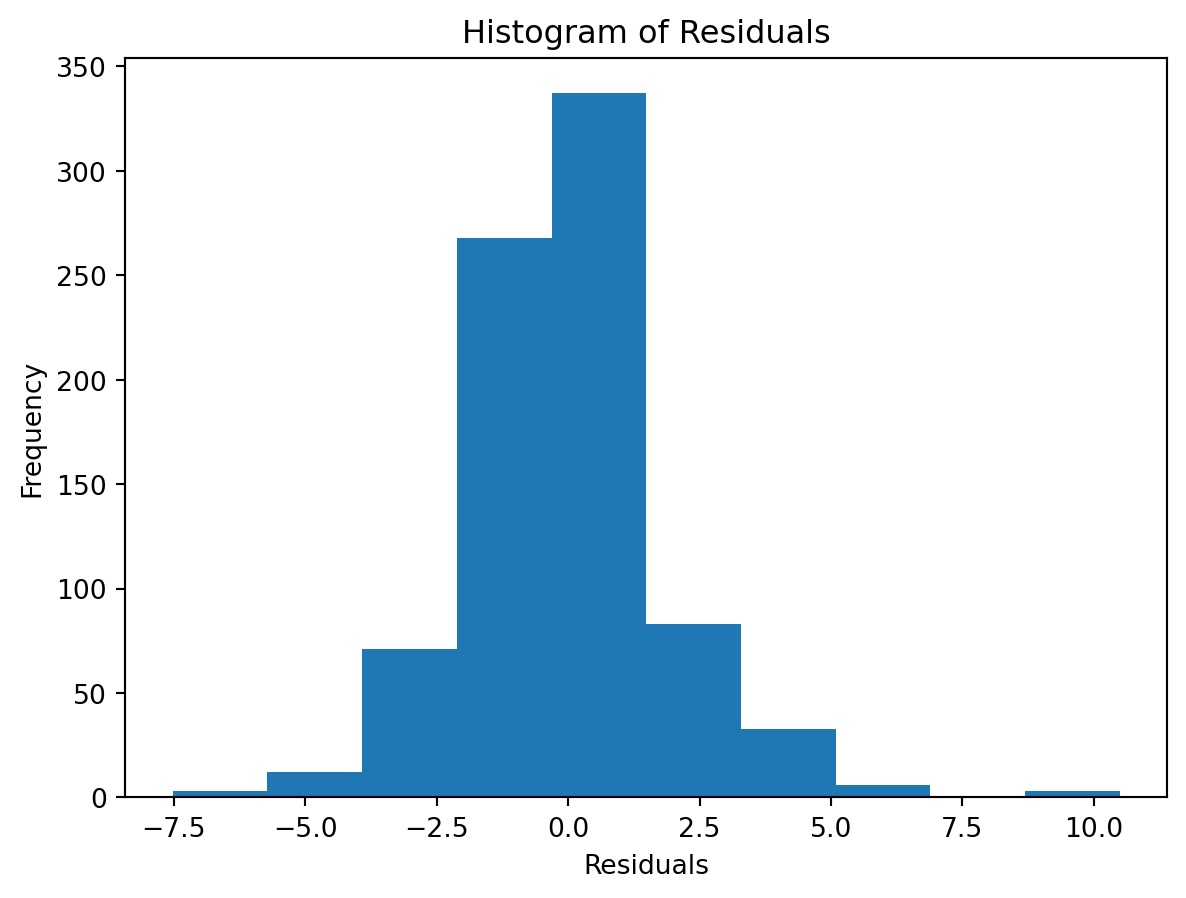

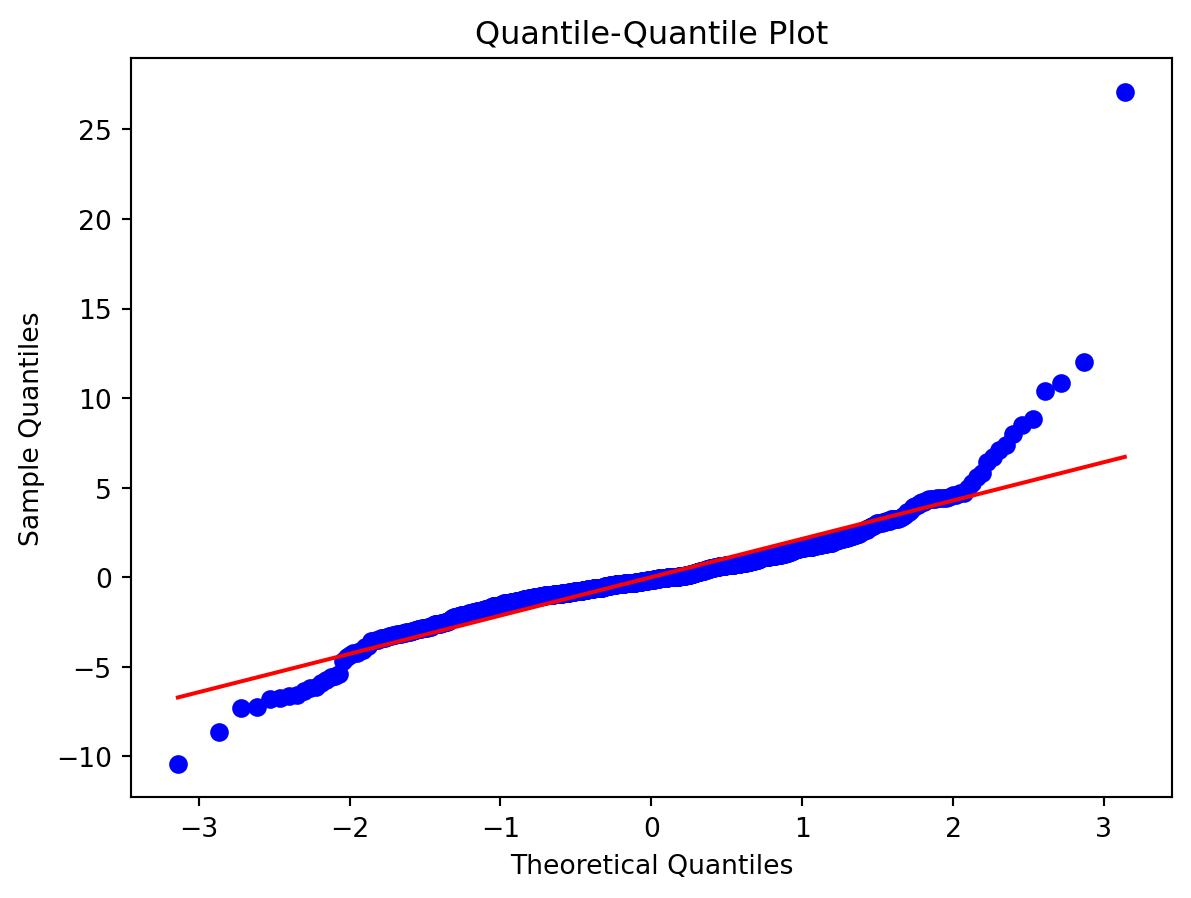

Normality of Residuals:

Check for …

- Are residuals normally distributed?

Leverage

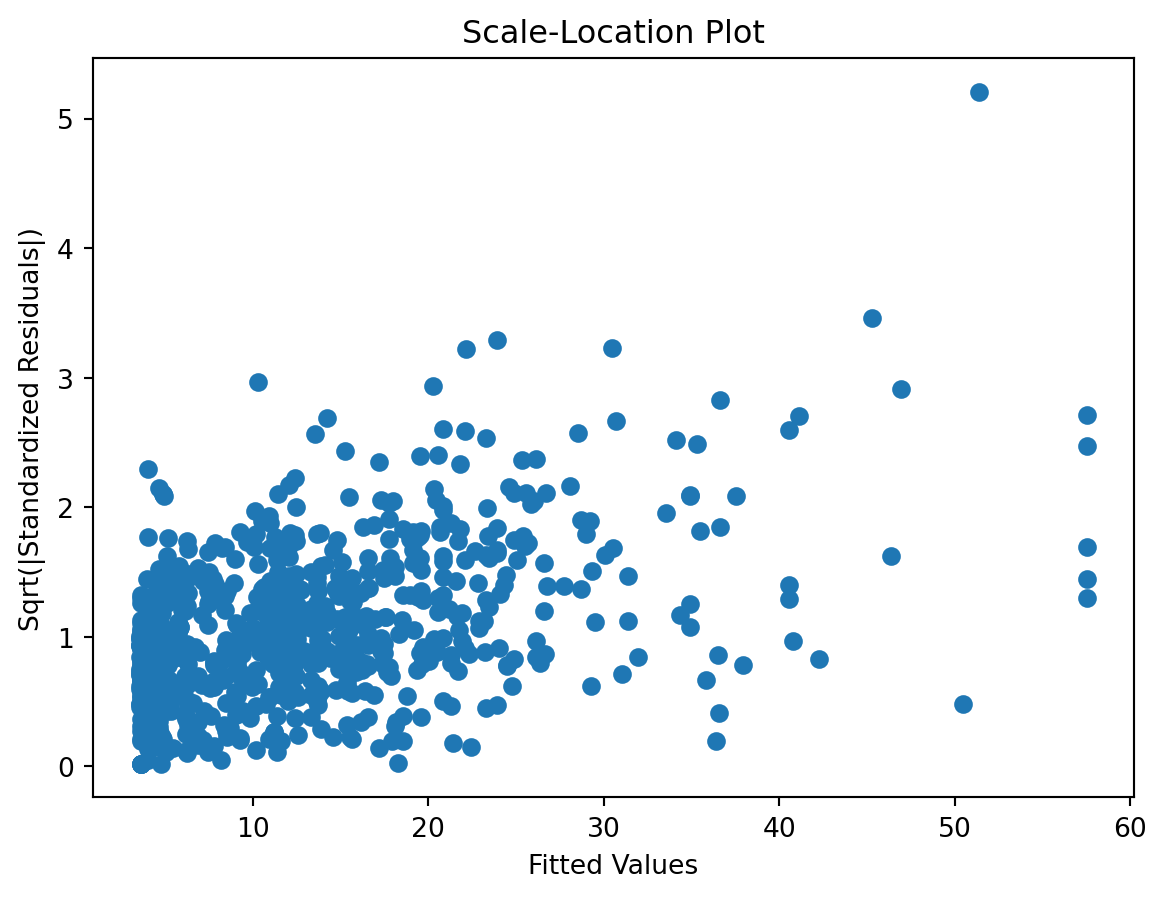

Scale-Location plot

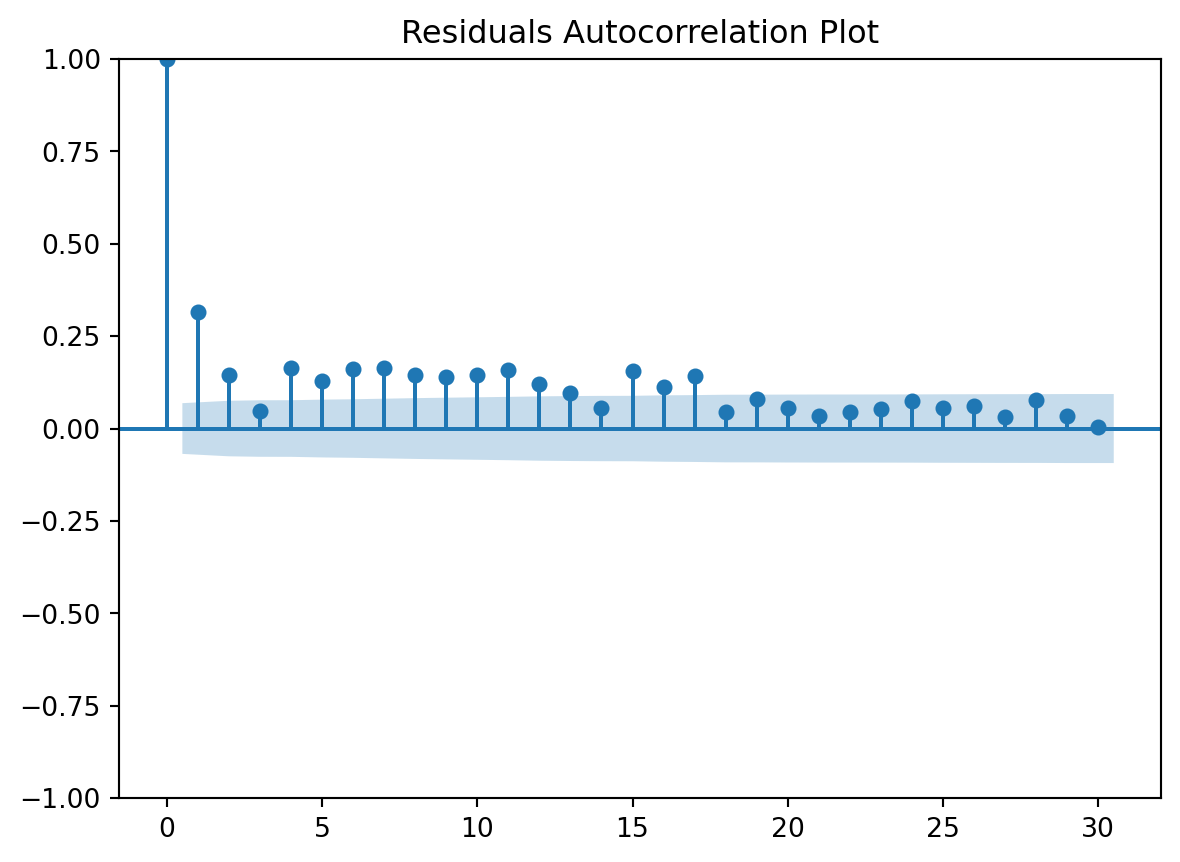

Residuals Autocorrelation Plot

Residuals vs Time

Compare vanilla vs. tuned

Cross-validation messages

♻️ stepit 'cross_validate_pipe': is up-to-date. Using cached result for `strom.modelling.cross_validate_pipe()` 2025-11-24 03:25:54

♻️ stepit 'cross_validate_pipe': is up-to-date. Using cached result for `strom.modelling.cross_validate_pipe()` 2025-11-24 03:25:54

Metrics

Single split

Metrics based on the test set of the single split
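As a quick numeric companion to the comparison, the sketch below puts the RMSE and R2 values from the two metrics tables side by side. The numbers are copied from those tables; the helper names `overfit_ratio` and `relative_change` are made up for this illustration.

```python
# Values copied from the vanilla and tuned metrics tables in this post.
vanilla = {
    "split_train_rmse": 1.895326,
    "split_test_rmse": 4.894354,
    "split_test_r2": 0.746361,
    "cv_test_rmse": 1.660649,
}
tuned = {
    "split_train_rmse": 2.332058,
    "split_test_rmse": 4.899035,
    "split_test_r2": 0.745876,
    "cv_test_rmse": 1.572622,
}


def overfit_ratio(m):
    """Test RMSE divided by train RMSE on the single split; 1.0 means no gap."""
    return m["split_test_rmse"] / m["split_train_rmse"]


def relative_change(new, old):
    """Signed change of `new` relative to `old`."""
    return (new - old) / old


for name, m in [("vanilla", vanilla), ("tuned", tuned)]:
    print(f"{name}: test/train RMSE ratio = {overfit_ratio(m):.2f}")

split_change = relative_change(tuned["split_test_rmse"], vanilla["split_test_rmse"])
cv_change = relative_change(tuned["cv_test_rmse"], vanilla["cv_test_rmse"])
print(f"single-split test RMSE change: {split_change:+.2%}")
print(f"CV test RMSE change: {cv_change:+.2%}")
```

The test/train ratio shrinks from roughly 2.6 to roughly 2.1, but mostly because the tuned model fits the training set less tightly: the single-split test RMSE is practically unchanged, while the CV test RMSE improves by about 5%.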

Cross validation

Predictions, residuals, observed


Time vs. Predicted and Observed

Time vs. Residuals

Model details

Pipeline(steps=[('vars', ColumnSelector(columns=['tt_tu_mean', 'rf_tu_mean', 'td_mean', 'vp_std_mean', 'tf_std_mean'])), ('model', GradientBoostingRegressor(random_state=7))])

Parameters (Pipeline)

| Parameter | Value |
|---|---|
| steps | [('vars', ...), ('model', ...)] |
| transform_input | None |
| memory | None |
| verbose | False |

Parameters (ColumnSelector)

| Parameter | Value |
|---|---|
| columns | ['tt_tu_mean', 'rf_tu_mean', ...] |

Parameters (GradientBoostingRegressor)

| Parameter | Value |
|---|---|
| loss | 'squared_error' |
| learning_rate | 0.1 |
| n_estimators | 100 |
| subsample | 1.0 |
| criterion | 'friedman_mse' |
| min_samples_split | 2 |
| min_samples_leaf | 1 |
| min_weight_fraction_leaf | 0.0 |
| max_depth | 3 |
| min_impurity_decrease | 0.0 |
| init | None |
| random_state | 7 |
| max_features | None |
| alpha | 0.9 |
| verbose | 0 |
| max_leaf_nodes | None |
| warm_start | False |
| validation_fraction | 0.1 |
| n_iter_no_change | None |
| tol | 0.0001 |
| ccp_alpha | 0.0 |

Pipeline(steps=[('vars', ColumnSelector(columns=['tt_tu_mean', 'td_mean'])), ('model', GradientBoostingRegressor(max_depth=5, min_samples_leaf=5, min_samples_split=48, n_estimators=60, random_state=7, subsample=1))])

Parameters (Pipeline)

| Parameter | Value |
|---|---|
| steps | [('vars', ...), ('model', ...)] |
| transform_input | None |
| memory | None |
| verbose | False |

Parameters (ColumnSelector)

| Parameter | Value |
|---|---|
| columns | ['tt_tu_mean', 'td_mean'] |

Parameters (GradientBoostingRegressor)

| Parameter | Value |
|---|---|
| loss | 'squared_error' |
| learning_rate | 0.1 |
| n_estimators | 60 |
| subsample | 1 |
| criterion | 'friedman_mse' |
| min_samples_split | 48 |
| min_samples_leaf | 5 |
| min_weight_fraction_leaf | 0.0 |
| max_depth | 5 |
| min_impurity_decrease | 0.0 |
| init | None |
| random_state | 7 |
| max_features | None |
| alpha | 0.9 |
| verbose | 0 |
| max_leaf_nodes | None |
| warm_start | False |
| validation_fraction | 0.1 |
| n_iter_no_change | None |
| tol | 0.0001 |
| ccp_alpha | 0.0 |